Repost: Setting up a GCC C/C++ development environment on Windows with Cygwin

https://blog.csdn.net/sqlaowen/article/details/54645241

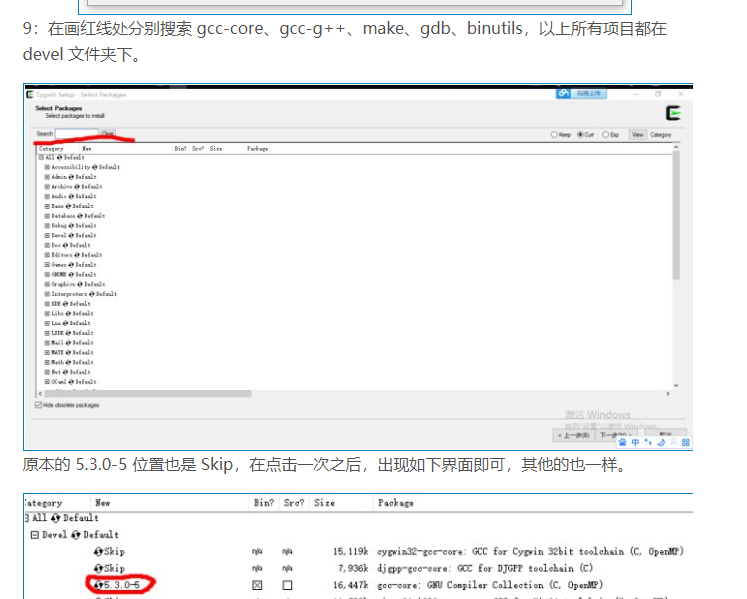

In the search box underlined in red, search for gcc-core, gcc-g++, make, gdb, and binutils in turn. All of these packages are under the Devel category.

Installing a pure MSYS development environment

The simplest way is to go to https://www.msys2.org/ and download the installer.

————————————————————————————————

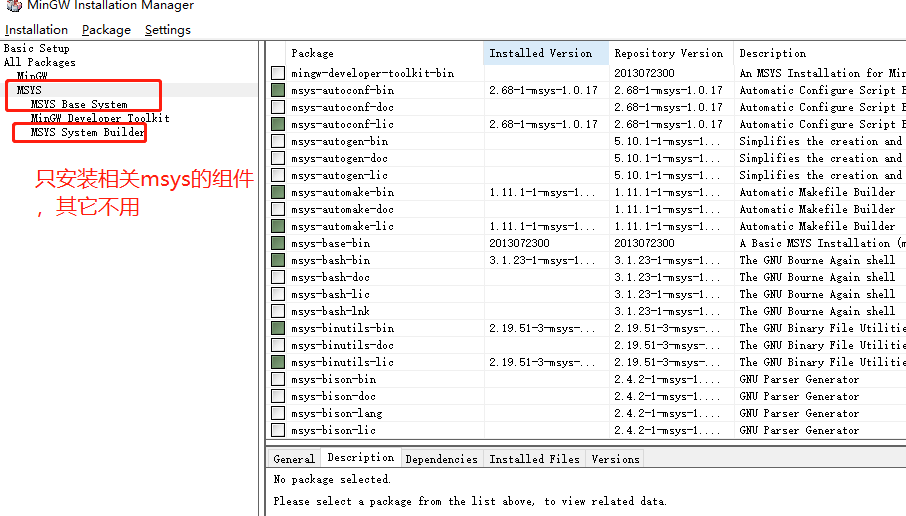

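MinGW is usually used for cross-platform porting. If the source code depends only on core Linux functionality, installing just the MSYS core components is enough.

For example, lrzsz can be built with only the MSYS tools.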

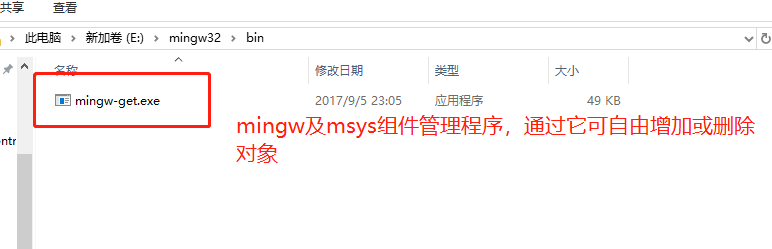

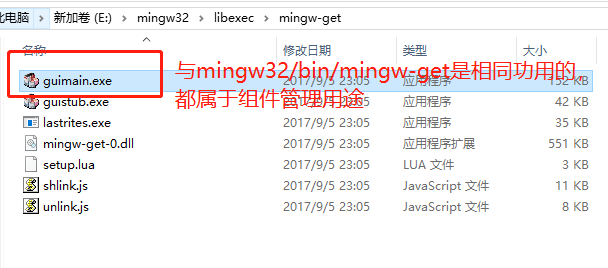

1. Install the component manager. [Only mingw-w32-install is needed; its management tool is in the directory shown below.]

2. Select the MSYS components.

Non-blocking channel

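    package main

    import (
        "log"
        "time"
    )

    func main() {
        ch := make(chan int, 10)

        // Consumer: drain the channel forever.
        go func() {
            for {
                <-ch
            }
        }()

        for i := 0; i < 100; i++ {
            // select with a default case never blocks: if the buffer is
            // full, the default branch runs instead of waiting.
            select {
            case ch <- i: // thank goodness
                log.Printf("msgchan:%v", i)
            default: // hm, push i to storage?
                log.Printf("default:%v", i)
            }
            time.Sleep(time.Microsecond)
        }
    }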
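The select/default pattern above can be wrapped in a small helper so that callers get a plain yes/no answer. This is a minimal sketch; the name `trySend` is hypothetical and not part of the original notes.

```go
package main

import "fmt"

// trySend attempts a non-blocking send on ch.
// It reports whether the value was accepted.
func trySend(ch chan<- int, v int) bool {
	select {
	case ch <- v:
		return true
	default:
		// Buffer full (or no receiver ready): give up immediately.
		return false
	}
}

func main() {
	ch := make(chan int, 2) // small buffer and no consumer, so it fills up
	for i := 0; i < 4; i++ {
		fmt.Println(i, trySend(ch, i))
	}
}
```

With a buffer of 2 and no consumer, the first two sends succeed and the remaining ones are rejected, which is where the "push i to storage" fallback would go.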

Handling JSON arrays in Go

    //query='[{"client_ver":"10.0.0.5","plug_name":"Kaiwpp","plug_ver":"1.0.0.5","distsrc":"student"}]'
    var params []interface{}
    err := json.Unmarshal([]byte(query), &params)
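When the shape of the array elements is known, decoding into a typed slice avoids type assertions on `interface{}`. The sketch below assumes every element has the four string fields shown in the sample query; the `plugInfo` type and `parsePlugs` function are hypothetical names introduced for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// plugInfo mirrors one element of the sample query array.
type plugInfo struct {
	ClientVer string `json:"client_ver"`
	PlugName  string `json:"plug_name"`
	PlugVer   string `json:"plug_ver"`
	DistSrc   string `json:"distsrc"`
}

// parsePlugs decodes a JSON array of plug-in descriptions.
func parsePlugs(query string) ([]plugInfo, error) {
	var params []plugInfo
	if err := json.Unmarshal([]byte(query), &params); err != nil {
		return nil, err
	}
	return params, nil
}

func main() {
	query := `[{"client_ver":"10.0.0.5","plug_name":"Kaiwpp","plug_ver":"1.0.0.5","distsrc":"student"}]`
	plugs, err := parsePlugs(query)
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Println(len(plugs), plugs[0].PlugName, plugs[0].PlugVer)
}
```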

Deploying the wiki with Docker

1. Writing the Dockerfile

    FROM centos:6.6

    ENV CONF_INST /opt/atlassian/
    ENV CONF_HOME /var/atlassian/application-data/

    COPY ./confluence-5.4.4.tar.gz /confluence-5.4.4.tar.gz
    COPY ./application-data-init.tar.gz /application-data-init.tar.gz

    RUN set -x && yum install -y tar && mkdir -p ${CONF_INST} && tar -xvf /confluence-5.4.4.tar.gz --directory "${CONF_INST}/"

    COPY ./startup.sh /startup.sh
    RUN chmod +x /startup.sh

    EXPOSE 8090
    VOLUME ["${CONF_HOME}", "${CONF_INST}"]
    CMD ["/startup.sh"]

2. Writing docker-compose.yml

    version: '3.1'
    services:
      confluence:
        image: wiki:1.0
        restart: always
        ports:
          - 8090:8090
        #entrypoint: bash -c "ping 127.0.0.1"
        #command: bash -c "ping 127.0.0.1"
        #command: /opt/atlassian/confluence/bin/catalina.sh run
        volumes:
          - /data/atlassian/confluence/logs:/opt/atlassian/confluence/logs
          - /data/atlassian/confluence/logs:/opt/atlassian/application-data/confluence/logs
          - /data/atlassian/application-data:/var/atlassian/application-data
          - ./backups:/var/atlassian/application-data/confluence/backups
          - ./restore:/var/atlassian/application-data/confluence/restore:ro
          - /etc/localtime:/etc/localtime:ro
          - /etc/timezone:/etc/timezone:ro
        build:
          context: ./crack
          dockerfile: Dockerfile

Installing and running Ansible

1. Install from the EPEL repository (epel-release), which is simple and safe.

    yum install epel-release -y
    yum install ansible -y

2. Verify

    ansible 127.0.0.1 -m ping

Output:

    127.0.0.1 | SUCCESS => {
        "changed": false,
        "ping": "pong"
    }

example:

https://github.com/leucos/ansible-tuto

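Automatically cleaning up expired Elasticsearch indices

Reposted from: https://www.jianshu.com/p/f4af986ca164

Note: this is someone else's script with targeted modifications. Before using it directly, check carefully that your index names have the form shown below. If they do not, the script needs to be adapted.

1. Write the cleanup script

Only indices named in the following form are supported: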

    green open k8s-stdout-2018.11.01         PHEIz5NXSw-ljRvOP1cj9Q 5 1   3748246 0   3.2gb   1.6gb
    green open recom-nginxacclog-2018.10.27  jl5ZBPHsQBifN0-pXEp_yw 5 1  52743481 0  20.8gb  10.4gb
    green open nginx-json-acclog-2018.11.09  r6jsGcHWRV6RP2mY6zFxDA 5 1     95681 0  50.4mb  25.1mb
    green open watcher_alarms-2018.11.09     r_GS_GQGRoCgyOgiCFCuQw 5 1        24 0 323.4kb 161.7kb
    green open .monitoring-es-6-2018.11.12   o8H2S-iERnuIAWQT7wuBRA 1 1      4842 0   3.2mb   1.6mb
    green open client-nginxacclog-2018.11.02 FxXdLPpiSnuBtrJOB2BRsw 5 1 179046160 0 220.8gb 110.3gb
    green open k8s-stderr-2018.11.16         Vggw7iYCQ6OHtW-sphgGrA 5 1     68546 0  20.4mb  10.2mb
    green open k8s-stderr-2018.10.21         ZCeZZFRWSVyyYDKMjzPnbw 5 1     15454 0   5.3mb   2.6mb
    green open watcher_alarms-2018.10.30     VqrbMbnuQ3ChPysgnwgm2w 5 1        44 0   371kb 185.5kb

    #!/bin/bash
    ######################################################
    # $Name:        clean_es_index.sh
    # $Version:     v1.0
    # $Function:    clean es log index
    # $Author:      sjt
    # $Create Date: 2018-05-14
    # $Description: shell
    ######################################################
    # No file lock is used here; add one if you need it.

    # Path of this script's log file
    CLEAN_LOG="/root/clean_es_index.log"

    # Index prefix (optional first argument)
    INDEX_PREFIX=$1
    # Delete indices older than this many days (optional second argument, default 30)
    DELTIME=${2:-30}
    echo "ready to delete indices older than ${DELTIME} days!!!"

    # Elasticsearch host IP and port
    SERVER_PORT=10.2.1.2:9200

    # Fetch the existing index names (column 3 of _cat/indices).
    # The ?v flag is omitted so that no header row is returned.
    echo "curl -s \"${SERVER_PORT}/_cat/indices\""
    if [ -z "$INDEX_PREFIX" ]; then
        INDEXS=$(curl -s "${SERVER_PORT}/_cat/indices" | awk '{print $3}')
    else
        INDEXS=$(curl -s "${SERVER_PORT}/_cat/indices" | awk '{print $3}' | grep "${INDEX_PREFIX}")
    fi

    # Cutoff date: with DELTIME=10, every index older than 10 days is deleted.
    # The value is in seconds since 1970-01-01 00:00:00 UTC. It is not named
    # SECONDS, because that is a special self-incrementing bash variable.
    CUTOFF_SECONDS=$(date -d "$(date +%F) -${DELTIME} days" +%s)

    # Create the log file if it does not exist yet
    if [ ! -f "${CLEAN_LOG}" ]
    then
        touch "${CLEAN_LOG}"
    fi

    # Delete the indices older than the cutoff
    echo "----------------------------clean time is $(date +%Y-%m-%d_%H:%M:%S) ------------------------------" >> "${CLEAN_LOG}"
    for del_index in ${INDEXS}
    do
        # The date is the last '-'-separated field of the name, e.g. 2018.11.01.
        # Index names with a different date layout need a matching change here.
        indexDate=$(echo "${del_index}" | awk -F '-' '{print $NF}')
        # Skip indices whose name does not end in yyyy.mm.dd
        case "${indexDate}" in
            [0-9][0-9][0-9][0-9].[0-9][0-9].[0-9][0-9]) ;;
            *) continue ;;
        esac
        # 2018.11.01 -> 20181101, a form that date -d accepts
        format_date=$(echo "${indexDate}" | sed 's/\.//g')
        indexSecond=$(date -d "${format_date}" +%s)
        if [ "$CUTOFF_SECONDS" -gt "$indexSecond" ]; then
            echo "it will delete ${del_index}......."
            echo "${del_index}" >> "${CLEAN_LOG}"
            # Keep the second line of the DELETE response as the result
            delResult=$(curl -s -XDELETE "${SERVER_PORT}/${del_index}?pretty" | sed -n '2p')
            # Write the result to the log
            echo "clean time is $(date)" >> "${CLEAN_LOG}"
            echo "delResult is ${delResult}" >> "${CLEAN_LOG}"
        fi
    done

2. Add a crontab job

    # Open the crontab editor
    crontab -e

    # In the editor, add the following line (runs daily at 01:10)
    10 1 * * * bash /app/elk/es/es-index-clear.sh > /dev/null 2>&1

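Kafka data retention

    # Keep data for at most 48 hours (the default is 7 days, i.e. 168 hours).
    log.retention.hours=48
    # Keep at most 1 GiB per partition (the default, -1, means no size limit).
    log.retention.bytes=1073741824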

Quick Logstash test

    output {
        stdout { codec => rubydebug }
    }

Using ruby in Logstash

In logstash.conf, the ruby filter can modify a field's data dynamically.

    filter {
        if [type] == "deployment" {
            drop {}
        }
        mutate {
            remove_field => ["kafka"]
        }
        ruby {
            code => "
                timestamp = event.get('@timestamp')            # read the @timestamp field
                localtime = timestamp.time + 28800             # add the 8-hour offset, in seconds
                localtimeStr = localtime.strftime('%Y.%m.%d')
                event.set('localtime', localtimeStr)           # store the shifted date
            "
        }
    }

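    filter {
        grok {
            #match => {"message"=>'(?\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}) - (?(\s+)|-) (?.*) "(?.*?) (?.*?)\?d=(?.*?) (?\S+)" (?\d+) (?\d+) "(?.*?)" "(?.*?)" "(?.*?)'}
            #match => {"message"=>'(?\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}) - (?(\s+)|-) (?.*) "(?.*?) (?.*?)\?d=(?.*?) (?\S+)" (?\d+) (?\d+) "(?.*?)" "(?.*?)".*?'}
            # Capture-group names are reconstructed from the fields referenced later in this filter; path and protocol are assumptions.
            match => {"message"=>'(?<remote_addr>\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}) - (?<remote_user>(\s+)|-) \[(?<timelocal>.*)\] "(?<method>.*?) (?<path>.*?)\?(d=)?(?<string>.*?) (?<protocol>\S+)" (?<status>\d+) (?<body_bytes_sent>\d+) "(?<http_referer>.*?)" "(?<http_user_agent>.*?)".*?'}
        }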

        if [tags] {
            drop {}
        }
        if [status] != "200" {
            drop {}
        }
        ruby {
            init => "require 'base64'"
            code => "
                string = event.get('string')
                if string
                    begin
                        b64 = Base64.decode64(string).force_encoding('utf-8')
                        #puts b64, event.get('message')
                        event.set('b64_decode', b64)
                    rescue ArgumentError
                        event.set('b64_decode', '')
                    end
                else
                    event.set('b64_decode', '')
                end
            "
        }
        if [b64_decode] == "" {
            drop {}
        }
        kv {
            source => "b64_decode"
            field_split => "&?"
            value_split => "="
        }
        if [type] == "template" {
            mutate {
                remove_field => ["@timestamp", "@version", "b64_decode", "message", "string", "body_bytes_sent", "timelocal", "http_user_agent", "http_referer", "status", "protocol", "uri", "ver", "remote_user", "remote_addr", "host", "method", "path"]
            }
        } else {
            date {
                match => ["timelocal", "dd/MMM/yyyy:HH:mm:ss Z"]
                target => "@timestamp"
            }
            mutate {
                remove_field => ["@version", "b64_decode", "message", "string", "body_bytes_sent", "timelocal", "http_user_agent", "http_referer", "status", "protocol", "uri", "ver", "remote_user", "remote_addr", "host", "method", "path"]
            }
        }
    }